A month or two ago I tried deploying an all-in-one RDO OpenStack environment with Packstack, all inside a single KVM virtual machine, for CloudForms testing. Using the instructions from the RDO project, I was able to deploy OpenStack without too many issues (hint: use flat networking if you run into configuration issues with Neutron). However, I also wanted to enable nested KVM to improve the performance of the nova instances, which the install instructions don't really cover.

In order to get nested KVM working, I had to perform the following steps.

- Enable the nested KVM kernel module options (these are processor-dependent) on the KVM host as well as on the VM where Packstack will be deployed. Ensure the following lines are present for Intel CPUs (on AMD CPUs, the equivalent is `options kvm-amd nested=1`):

```
# cat << EOF > /etc/modprobe.d/kvm.conf
options kvm-intel nested=1
options kvm-intel enable_shadow_vmcs=1
options kvm-intel enable_apicv=1
options kvm-intel ept=1
EOF
```
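Before rebooting, it can be worth checking what the loaded module actually reports. The sketch below is a hypothetical helper (`nested_kvm_enabled` is not a standard tool) that reads the `nested` parameter from sysfs. It assumes the `kvm_intel` or `kvm_amd` module is loaded and that the parameter reads `Y` or `1` when nesting is on.

```shell
# Hypothetical helper: succeed if the loaded KVM module reports nested=on.
# kvm_intel shows "Y"/"N" here; kvm_amd has historically shown "1"/"0".
# An optional argument overrides the sysfs path (useful for testing).
nested_kvm_enabled() {
  local param="${1:-}" candidate
  if [ -z "$param" ]; then
    # Use whichever vendor module is currently loaded
    for candidate in /sys/module/kvm_intel/parameters/nested \
                     /sys/module/kvm_amd/parameters/nested; do
      if [ -r "$candidate" ]; then
        param="$candidate"
        break
      fi
    done
  fi
  # Exit status 2 means no readable parameter, i.e. no KVM module loaded
  if [ -z "$param" ] || [ ! -r "$param" ]; then
    return 2
  fi
  case "$(cat "$param")" in
    Y|y|1) return 0 ;;   # nesting enabled
    *)     return 1 ;;   # nesting disabled
  esac
}
```

For example, `nested_kvm_enabled && echo "nested KVM is on"`. If no VMs are running, `modprobe -r kvm_intel && modprobe kvm_intel` (as root) reloads the module with the new options without a full reboot.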

- Configure nova.conf on the Packstack VM so that nova uses KVM as its hypervisor instead of QEMU software emulation (which is really slow). The following line needs to be in the [libvirt] section of /etc/nova/nova.conf:

```
# grep ^virt_type /etc/nova/nova.conf
virt_type=kvm
```
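Because `grep ^virt_type` matches the key in any section, a slightly stricter check only looks inside `[libvirt]`. The hypothetical awk-based helper below (`libvirt_virt_type`) is a sketch that assumes simple `key = value` lines and does not try to handle every INI edge case.

```shell
# Hypothetical helper: print the virt_type value from the [libvirt] section
# of nova.conf. Keys in other sections and commented-out lines are ignored.
libvirt_virt_type() {
  awk -F '=' '
    # Track whether we are currently inside the [libvirt] section
    /^\[/ { in_libvirt = ($0 ~ /^\[libvirt\][ \t]*$/); next }
    in_libvirt && $1 ~ /^[ \t]*virt_type[ \t]*$/ {
      value = $2
      gsub(/^[ \t]+|[ \t]+$/, "", value)   # trim surrounding whitespace
      print value
      exit
    }
  ' "${1:-/etc/nova/nova.conf}"
}
```

For example, `[ "$(libvirt_virt_type)" = kvm ] || echo "virt_type is not set to kvm"`. If `crudini` is installed, `crudini --set /etc/nova/nova.conf libvirt virt_type kvm` sets the value; the compute service (`openstack-nova-compute` on RDO) has to be restarted to pick it up.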

- Disable reverse path filtering on the KVM host and the Packstack VM, then reboot both the KVM host and the VM:

```
# cat << EOF > /etc/sysctl.d/98-rp-filter.conf
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.all.rp_filter = 0
EOF
```

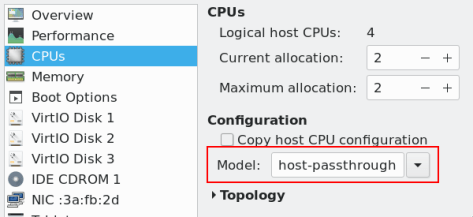
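The kernel's `rp_filter` documentation states that the larger of `conf/all` and `conf/<interface>` is used for source validation, so `all` must be 0, and interfaces that already exist keep their own per-interface value (`default` only seeds newly created interfaces). The hypothetical helper below (`rp_filter_disabled`) is a sketch that checks the `all` and `default` values; the optional argument is an assumption added only to make it testable.

```shell
# Hypothetical helper: succeed if rp_filter is 0 for both the "all" and
# "default" templates. An optional argument overrides the /proc/sys root.
rp_filter_disabled() {
  local root="${1:-/proc/sys}" scope value
  for scope in all default; do
    # Exit status 2 means the value could not be read at all
    value=$(cat "$root/net/ipv4/conf/$scope/rp_filter" 2>/dev/null) || return 2
    [ "$value" = 0 ] || return 1
  done
  return 0
}
```

`sysctl --system` loads the files in /etc/sysctl.d immediately, after which `rp_filter_disabled && echo "rp_filter is off"` should succeed.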

- Ensure that the CPU mode of the Packstack VM is set as follows, using either `virsh edit <domain>` or virt-manager (while the VM is powered off):

```
# virsh dumpxml <domain> | grep "cpu mode"
<cpu mode='host-passthrough' check='partial'/>
```
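To confirm the CPU mode from a script, the attribute can be pulled out of the domain XML. The `cpu_mode` helper below is hypothetical and uses a plain `sed` match rather than an XML parser, assuming (as in typical `virsh dumpxml` output) that `mode` is the first attribute on the `<cpu>` element.

```shell
# Hypothetical helper: print the mode attribute of the <cpu> element from
# libvirt domain XML read on stdin. Text match only, not a real XML parser.
cpu_mode() {
  sed -n "s/.*<cpu mode=['\"]\([^'\"]*\)['\"].*/\1/p" | head -n 1
}
```

For example, `virsh dumpxml packstack | cpu_mode`, where `packstack` is a placeholder for your domain name. A CPU mode change only takes effect once the VM is fully shut down and started again (`virsh shutdown` followed by `virsh start`), not after a reboot from inside the guest.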

- To confirm that nested KVM is enabled after a reboot, see if /proc/cpuinfo displays the correct CPU flag (vmx or svm) inside the Packstack VM:

```
# grep -E '(vmx|svm)' /proc/cpuinfo
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb invpcid_single pti ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx rdseed adx smap clflushopt intel_pt xsaveopt xsavec xgetbv1 xsaves dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp flush_l1d
```
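Rather than eyeballing one long line, the hypothetical `virt_flag_cpus` helper below counts how many vCPUs advertise `vmx` or `svm`. It is a sketch that assumes the standard /proc/cpuinfo layout, with one `flags` line per processor.

```shell
# Hypothetical helper: count the processors whose "flags" line contains vmx
# (Intel) or svm (AMD). -w matches whole words only, so a longer flag that
# merely contains those letters is not counted.
virt_flag_cpus() {
  # Only look at "flags" lines; newer kernels also print a separate
  # "vmx flags" line on Intel CPUs, which would otherwise be double-counted.
  grep -E '^flags[[:space:]]*:' "${1:-/proc/cpuinfo}" | grep -cwE 'vmx|svm'
}
```

Inside the Packstack VM, the result should match the vCPU count reported by `nproc`. `[ -c /dev/kvm ]` additionally confirms that the KVM device node exists, and libvirt's `virt-host-validate` runs a broader set of checks.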

Once all of the above steps are in place, nova can use KVM as a hypervisor inside the VM itself. However, I ran into an issue where I could not access any of the nested instances that nova provisioned via `nova boot` or Horizon, even after assigning a floating IP address to them.

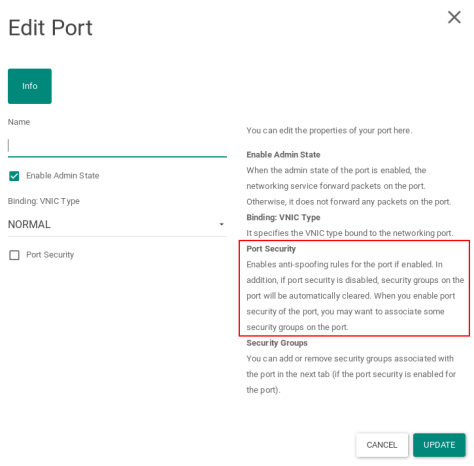

After a little digging, I found that port security must be disabled on any nova instances deployed when using nested KVM. Normally you would want it enabled, but in a testing/lab environment it's OK to disable it. MAC address spoofing is used in nested virtualisation environments so that traffic can be routed to the nested instances, and port security's anti-spoofing rules would otherwise drop that traffic.
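Port security can be disabled per instance from the command line. The sketch below assumes the unified `openstack` client rather than the legacy `nova` CLI, admin credentials already sourced (Packstack writes a `keystonerc_admin` file), and Neutron's ML2 `port_security` extension being enabled. `disable_port_security` itself is a hypothetical helper name.

```shell
# Hypothetical helper: disable port security on every Neutron port attached
# to one server. Assumes the openstack CLI and sourced admin credentials.
disable_port_security() {
  local server="${1:-}" ports port
  if [ -z "$server" ]; then
    echo "usage: disable_port_security <server>" >&2
    return 2
  fi
  # IDs of every Neutron port attached to the server
  ports=$(openstack port list --server "$server" -f value -c ID) || return 1
  for port in $ports; do
    # Security groups must be detached before port security can be disabled
    openstack port set --no-security-group --disable-port-security "$port" || return 1
  done
}
```

For example, `disable_port_security my-instance`, where `my-instance` is a placeholder. Alternatively, `openstack network set --disable-port-security <network>` changes the default for ports created on that network afterwards, which may be simpler in a lab.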

Afterwards I was able to access all of my nested nova instances. You can nest even further if you want, but performance degrades significantly with each additional level, and I haven't found a compelling use case for going beyond one level of nesting.